Why Learning-focused Monitoring, Evaluation, and Learning is the Best Investment You Can Make Right Now

In a constrained funding environment, monitoring, evaluation, and learning (MEL) that truly focuses on learning (that is, generating the right data to make timely, informed decisions that improve programs and increase impact) is not optional. It is the smartest investment to make for cost-effective impact. Unfortunately, most MEL systems today are broken, built primarily for accountability instead of the learning required to develop and scale effective solutions. It’s time to change that, and funders hold a special responsibility.

The problem: A broken MEL system

Consider this common scenario: An organization launches a new education program with a long, detailed list of indicators that focus on meeting funder requirements and tracking outputs rather than understanding what’s working. Two years and countless spreadsheets later, the program has not improved. The data sits in reports no one uses to make decisions. Meanwhile, the program team suspects certain activities are not working but has no time to investigate because they are too busy collecting more data for the next report.

In this scenario, MEL becomes a compliance exercise rather than a strategic investment that guides program improvement and decision-making.

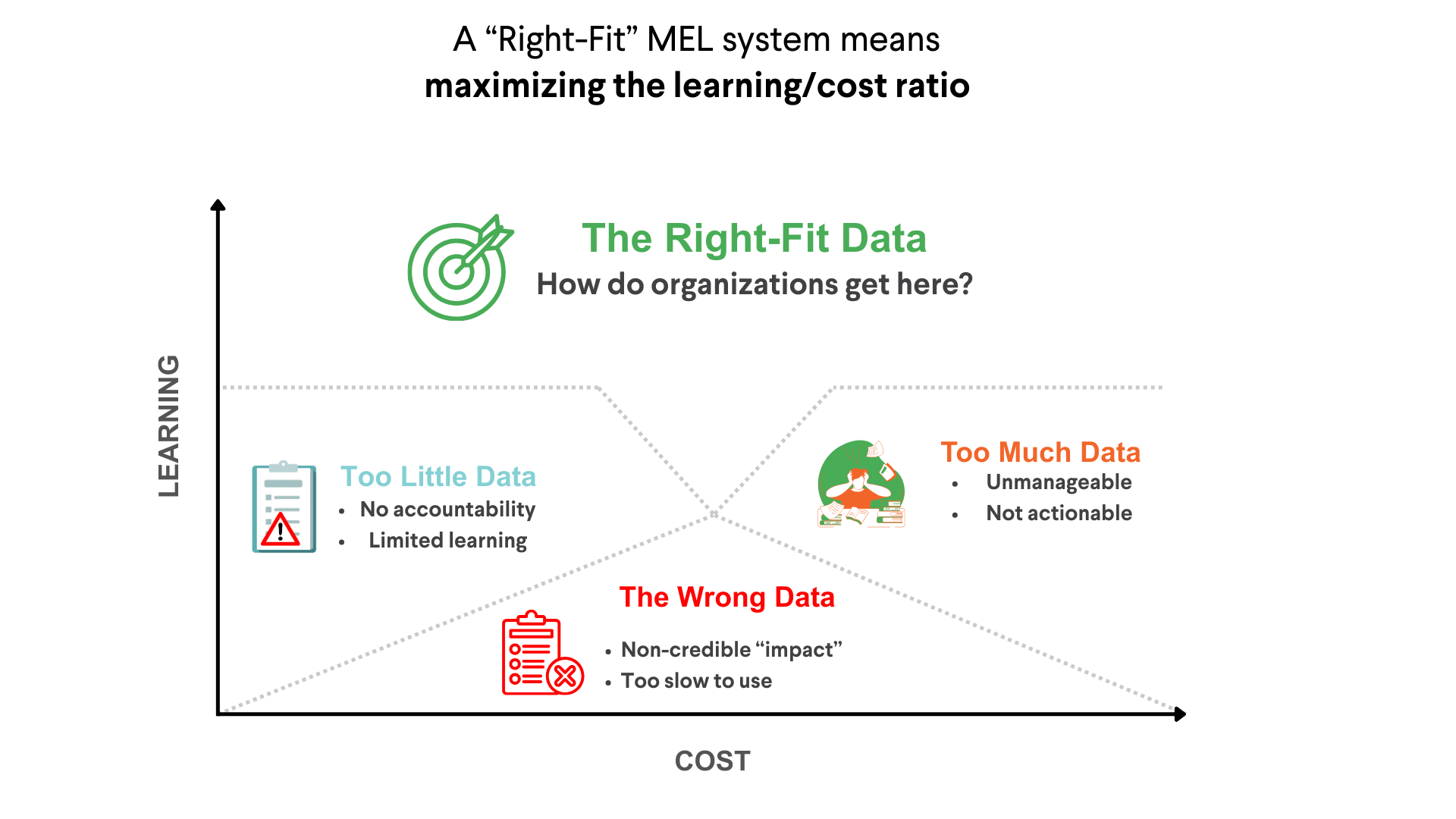

This broken system leaves organizations in one of three places:

- Too much data collected, not all of it used

- Too little data collected, used only to comply with pre-defined reporting indicators

- The wrong data being collected, used for misleading claims

Organizations end up feeling trapped: If they do not use the data they collect beyond reporting, it is wasteful. If they do use it, it can be misleading. What should they do?

The solution: IPA’s approach to learning-focused MEL

Learning-focused MEL turns this dynamic around. Instead of using data solely for reporting on indicators that do not produce valuable insights, it generates the right data to improve programs and increase impact.

This approach is grounded in Dean Karlan and Mary Kay Gugerty’s book The Goldilocks Challenge, which calls for investing in right-fit evidence: aligning learning approaches with a program’s level of maturity.

IPA established the Right-Fit Evidence (RFE) Unit in 2017 to act on this call. At RFE, we start with one question: What does this organization need to learn right now? The answers are informed by:

- The decisions that the learning activities will inform

- The program’s level of maturity

- The most cost-effective approaches to generate that learning

This approach is transformative: It helps organizations use evidence to learn, adapt, and amplify their impact.

What learning-focused MEL looks like in practice

Three core principles guide this approach:

The learning-to-cost ratio: Before collecting any data, ask: How will the data be used? Will it inform a specific decision or support program improvement? Data collection costs should match learning value. Prioritization is the superpower here: Focus on data collection that yields the most learning value.

Early outcomes as the learning sweet spot: Programs can measure changes in knowledge, attitudes, practices, or behaviors long before final outcomes. These early indicators move quickly, provide actionable signals to refine interventions, and help determine whether a program is ready for an impact evaluation.

A leadership-led learning culture: Learning-focused MEL works when leadership values evidence-based decisions, creating space for teams to invest in learning and act on findings. This means celebrating failures and bravely making course corrections.

In practice, these principles translate into three concrete actions:

Right-fit data collection: Collect data in the same proportion you analyze it, and analyze it in the same proportion you use it for decisions or learning. Prioritize data that yields the most learning value.

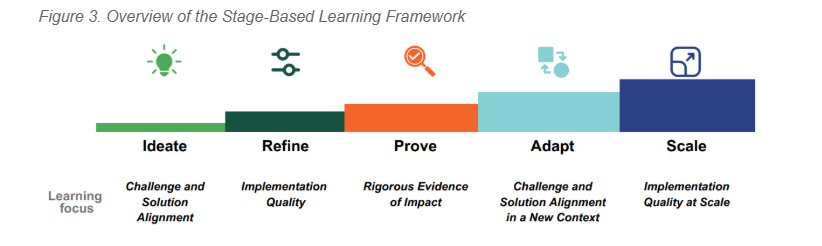

Stage-based approaches: Match methodology to program stage: Ideas need prototyping, pilots need rapid feedback loops, more mature programs need rigorous impact estimates, and programs operating at scale need implementation quality data. Not everything needs a randomized controlled trial (RCT). Jumping straight to an impact evaluation before ironing out the kinks wastes resources and often leads to disappointing results.

Collaboration between MEL and program teams: MEL teams must work hand in hand with program teams as true partners, collaborating not only to generate relevant data, but also to inform decisions. MEL cannot be siloed in a separate department.

The funders' role

Implementing partners can’t transform MEL alone.

Funders have a special role in stewarding this shift. Funder reporting requirements create incentives and shape how evidence is collected, used, and valued. By rethinking those requirements, funders can unlock a different kind of accountability: one that rewards learning, adaptation, and transparency.

Funders can and should:

- Design grants for learning, not just delivery, and allocate time and budget for iterative testing;

- Match methodology to stage, funding rapid feedback methods when developing new interventions, and funding rigorous evaluations when programs mature; and

- Shift requirements to prioritize reflection and adaptation over reporting on pre-defined indicators.

When funders push programs to expand before learning what works, or demand impact evaluations before programs have refined their approach, they contribute to the broken system.

To learn more about the role funders play in this shift, read our publication, Enabling Stage-Based Learning: A Funder's Guide to Maximizing Impact.

RFE’s journey: Lessons from our work

At RFE, we have embedded these principles in our advisory work with more than 100 partners in over 20 countries over the past eight years, working across Africa, Latin America, and beyond, in both development and humanitarian contexts.

Our portfolio has evolved from supporting specific programs to influencing larger initiatives and partnering with funders and implementers on their entire MEL systems. RFE has worked with:

- Global and regional foundations, including The LEGO Foundation in Africa and VélezReyes+ in Latin America, to structure portfolio-wide learning systems and strategic investment priorities.

- Implementers across Latin America, Africa, and Asia, like BRAC, Fundación Juanfe, Ubongo, Ei Mindspark, and One Acre Fund, to embed learning-focused MEL into their programming by designing learning plans, providing technical assistance, building A/B testing systems, and conducting user research and testing, among many other forms of technical MEL advisory.

- Governments, including Ghana's Ministry of Education and Colombia’s Early Childhood Agency, to embed learning-focused MEL in their operations through capacity-building and technical support, in some cases through IPA's Embedded Evidence Labs.

Across these experiences, one truth remains constant: prioritizing learning is a smart investment.

To learn more about RFE's work, visit our website.

Looking ahead: Why this matters now more than ever

Development is now facing acute financial constraints, raising the risk that the field will double down on a broken MEL system—one that prioritizes accountability at the expense of investing in learning.

Programs embedding learning-focused MEL catch problems early and adapt quickly. They avoid wasting resources on approaches that are not working or on unnecessary and expensive data collection that does not match their learning needs.

Technology is making learning cheaper and faster, and AI is rapidly transforming what is possible and how learning and decision-making happen. Digital dashboards can now show program teams which activities are working within minutes. Mobile data collection captures participant feedback at a fraction of traditional survey costs. And AI-powered tools can analyze large volumes of qualitative data in seconds.

But technology and AI need to be anchored within strong, right-fit learning and data systems, which means building capacity and improving data infrastructure, so that organizations can test more, learn faster, and adapt with confidence, all while reducing the cost per learning cycle through right-fit approaches.

As funding tightens, and technology and AI accelerate, the question is not whether organizations can afford to invest in learning; it’s whether we can afford not to.

This blog post launches a series on learning-focused MEL in practice. Stay tuned for upcoming posts.